The Factorization Curse: Which Tokens You Predict Underlie the Reversal Curse and More

Abstract

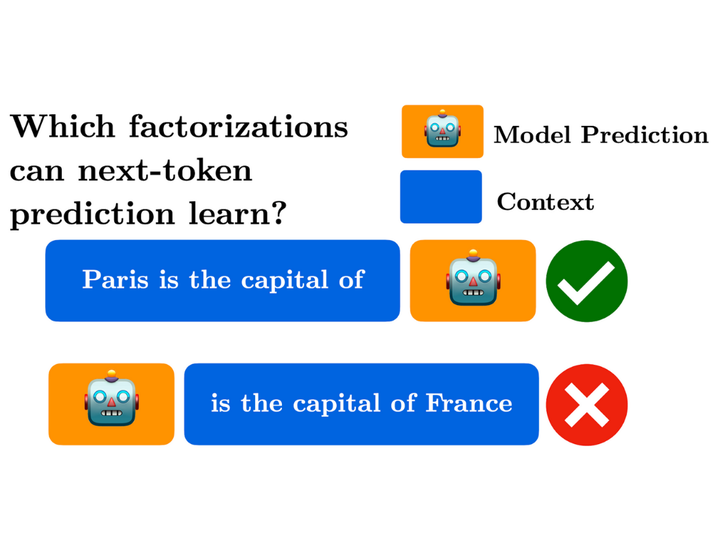

Left-to-right autoregressive transformers have shown impressive capabilities but still suffer from fundamental issues that stem from their inability to make full use of their training data. We argue that LLMs can absorb much more information from a given training sample when they are trained with stronger objectives than next-token prediction. We propose factorization training as a fix for the reversal curse and show that it can encourage transformers to learn to plan much better than the standard next-token objective does. The key insight is that training models to predict many tokens ahead (or many tokens backward) can unlock new capabilities and address some of the limitations of left-to-right transformers.

Type